Everyone wants to know which AI agent is the best, but the real question you should be asking in 2026 is: which one protects your runway? We are not looking for the smartest model in a vacuum; we are looking for the tool that solves the most coding problems per dollar spent.

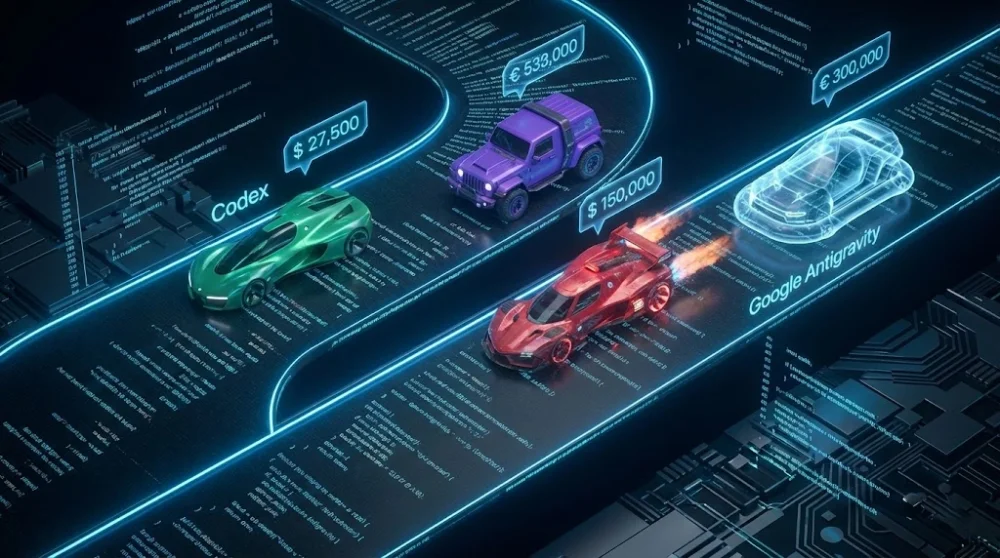

While Codex has long been the volume king and Cursor the UX favorite, a massive new contender has just entered the arena: Google Antigravity. With its Agent-First architecture and aggressive pricing strategy, it completely disrupts the math we used to do. If you are a casual builder or an entrepreneur, the answer might shift towards Windsurf or this new Google powerhouse depending on how you manage your prompts. Let’s break down the math, the token limits, and the hidden costs that most pricing pages don't tell you.

The Core Metrics: How We Calculated the True Cost

To determine the real value, we cannot just look at the monthly subscription fee. We have to look at Token Efficiency and Cost Per Problem.

Different platforms calculate consumption differently. Some use strict token counters, while others use credits or message caps. To standardize this, our calculation assumes a heavy user scenario: 15 hours of active usage per week. This helps us visualize how quickly you hit the wall with each service and whether you are forced to pay for extra credits just to finish your project.
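The two metrics can be written down as a minimal sketch. The function names and the example figures below are hypothetical placeholders for illustration, not measured values for any plan.

```python
def problems_per_month(monthly_tokens: float, tokens_per_problem: float) -> float:
    """How many problems a monthly token allowance covers."""
    if tokens_per_problem <= 0:
        raise ValueError("tokens_per_problem must be positive")
    return monthly_tokens / tokens_per_problem


def cost_per_problem(monthly_fee_usd: float, problems_solved: float) -> float:
    """Dollars spent per solved problem in one month."""
    if problems_solved <= 0:
        raise ValueError("problems_solved must be positive")
    return monthly_fee_usd / problems_solved


# Placeholder inputs (assumptions, not real plan data):
# a $20 plan, 4 million tokens per month, 2 million tokens per problem.
solved = problems_per_month(4_000_000, 2_000_000)
print(cost_per_problem(20.0, solved))
```

The point of splitting the calculation in two is that a plan can look cheap on its fee alone and still lose once you divide by the number of problems it actually lets you finish.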

Google Antigravity: The Free Wildcard (For Now)

If we are talking strictly about cost-efficiency, we cannot ignore the elephant in the room. Google Antigravity recently launched as an agent-first IDE, and right now, it is arguably the most dangerous competitor to paid plans.

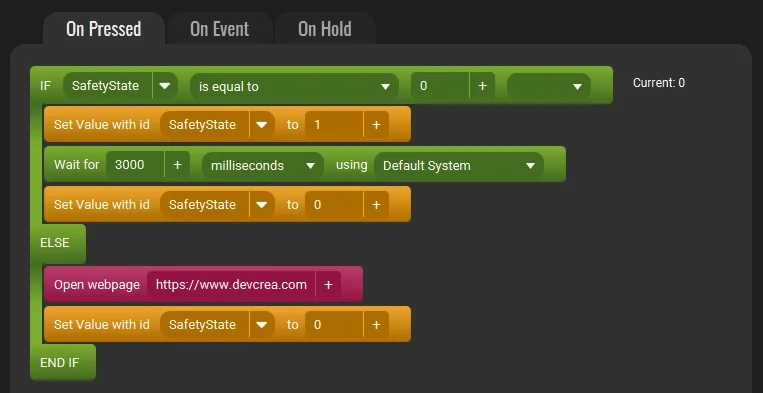

Unlike standard editors that just autocomplete code, Antigravity introduces a Manager View, a mission control where you direct autonomous agents to plan and execute tasks asynchronously.

- The Cost Advantage: Currently, in its public preview phase, Antigravity is free for personal use. It provides generous rate limits for Gemini 3 Pro and supports Claude Sonnet 3.5 via BYOK (Bring Your Own Key) or limited quotas.

- Why It Matters: If you can offload the heavy lifting, like scaffolding a new web development project or debugging a complex loop, to a free agent that generates Artifacts (verifiable deliverables) instead of burning through paid tokens, your cost per problem drops to zero.

- The Catch: It is still in preview. The free ride won't last forever, but for 2026, it is the absolute best way to start without opening your wallet.

Claude Code: The Premium Powerhouse (But at What Cost?)

Claude Code is often regarded as the gold standard in reasoning capabilities, especially with the Sonnet 3.5 model. However, its pricing structure is rigid.

The Pro Plan gives you approximately 44,000 tokens within a 5-hour window. Fifteen hours of active usage spans three such windows, so a day of maximum use totals roughly 3 × 44,000 = 132,000 tokens.

There is also a weekly cap ranging from 40 to 80 hours depending on demand. This translates to a maximum theoretical limit of about 3 million to 4 million tokens per month.
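The arithmetic behind these figures can be reproduced in a few lines. This is a sketch that assumes a 30-day month with three full windows used every day, which appears to be how the upper end of the estimate is reached; the constant names are ours.

```python
TOKENS_PER_WINDOW = 44_000  # approximate Pro allowance per 5-hour window (from the text)
WINDOW_HOURS = 5
ACTIVE_HOURS = 15           # three back-to-back windows
DAYS_PER_MONTH = 30         # assumption: every day is maxed out

windows = ACTIVE_HOURS // WINDOW_HOURS
tokens_per_day = windows * TOKENS_PER_WINDOW
tokens_per_month = tokens_per_day * DAYS_PER_MONTH

print(windows, tokens_per_day, tokens_per_month)  # 3 132000 3960000
```

The result, just under 4 million tokens, is a ceiling rather than a typical figure, since few people will fill three windows every single day.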

While 4 million tokens sounds like a lot, you have to consider the Cost Per Problem. A stronger model may solve issues in fewer attempts, using fewer tokens overall. However, if you are on a budget, hitting that 5-hour window limit can be a productivity killer. For heavy builders, the Pro plan often feels restrictive, pushing you toward the much more expensive 5x plan.

Codex (OpenAI): The Budget King?

Calculating Codex's limits is notoriously difficult because OpenAI does not transparently disclose token numbers. Instead, they use a message cap system.

You typically get 45 to 225 messages per 5-hour window. This wide range depends on complexity. If you are asking simple syntax questions, you get more. If you are asking the agent to scaffold a complex [software](https://www.devcrea.com/software) architecture using multiple tools, you will be capped closer to 45.

Based on community data and our 4-month stress test, this equates to roughly 3 to 5 million tokens per week. That gives us a massive monthly estimate of 13 to 21 million tokens.
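To make the weekly-to-monthly conversion explicit, here is a small sketch. It assumes an average month of 52/12 weeks (about 4.33); the helper name is hypothetical.

```python
def monthly_from_weekly(weekly_millions: float) -> float:
    """Convert a weekly token estimate (in millions) to a monthly one."""
    return weekly_millions * 52 / 12  # assumption: average month = 52/12 weeks


low = monthly_from_weekly(3)
high = monthly_from_weekly(5)
print(f"{low:.1f} to {high:.1f} million tokens per month")
```

Under this assumption the range comes out at about 13.0 to 21.7 million, so the 13 to 21 million estimate is, if anything, slightly conservative at the top end.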

Comparing the entry-level plans, Codex offers significantly more raw volume than Claude Code. Even though the performance might be slightly behind Claude's Opus models in complex reasoning, the sheer volume makes it the winner for developers who iterate constantly.

The Editor Battle: Cursor vs. Windsurf

This is where most developers get stuck. Both are IDEs (Integrated Development Environments) that integrate AI deeply, but their billing models are polar opposites, and Windsurf has recently tightened its belt.

Cursor’s API Credit System

Cursor is arguably the most user-friendly for beginners. It has a polished interface that makes starting to code feel less intimidating. However, its Pro plan essentially resells you API credits.

If you use a premium model like Claude Sonnet 3.5 inside Cursor, you are paying the API provider's rate (approx. $3 input / $15 output per million tokens). This burns through your budget fast. Our calculations show that using premium models in Cursor gives you only about 3.3 million tokens per month before you have to pay extra.

If you switch to a cheaper model (like a basic GPT-4o variant), you can stretch this to 6 million tokens, but you lose the reasoning quality. Also, be aware of region-specific restrictions; sometimes you might encounter errors like "this model provider doesn't serve your region", which can be a hassle to configure around.
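One way to arrive at a figure near 3.3 million tokens for the premium model is sketched below. The $3 and $15 rates come from the text; the $20 monthly credit budget and the 75/25 input-to-output token split are our assumptions, chosen for illustration.

```python
def tokens_for_budget(budget_usd: float, input_price: float,
                      output_price: float, input_share: float) -> float:
    """Tokens a budget buys, given per-million prices and the input token share."""
    if not 0.0 <= input_share <= 1.0:
        raise ValueError("input_share must be between 0 and 1")
    # Blended price in dollars per million tokens.
    blended = input_share * input_price + (1.0 - input_share) * output_price
    return budget_usd / blended * 1_000_000


# Assumptions: $20 of included credits, 75% of tokens are input.
premium = tokens_for_budget(20.0, 3.0, 15.0, 0.75)
print(f"{premium / 1e6:.2f} million tokens")  # 3.33 million tokens
```

The split matters a great deal: output tokens cost five times as much as input tokens at these rates, so an agent that writes long responses will exhaust the same budget much sooner.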

Windsurf’s New Credit Trap

Windsurf uses a credit system that initially looked simple (1 prompt = 1 credit), but they have introduced a complexity multiplier that you need to watch out for.

- Standard Models: 1 Credit per prompt.

- Premium Models (Sonnet 3.5): 2 Credits per prompt.

You get 500 credits per month on the Pro plan. If you are exclusively using the best model (Sonnet 3.5) for high-quality reasoning, you effectively only get 250 prompts per month.

This is a double-edged sword.

- The Good: You can still write a massive, complex prompt asking the AI to build an entire authentication flow, using 30,000 tokens of context, and it will only cost you 2 credits. This is highly efficient for power users who know how to engineer their prompts.

- The Bad: If you treat it like a chat and ask 10 small questions like "how do I center a div?" using Sonnet 3.5, you just burned 20 credits for something a free model could have done.

For the casual builder, Windsurf requires more discipline now. You have to be strategic: use the cheaper Cascade Base models for simple chatter and save your Sonnet 3.5 credits for the heavy architectural lifting.
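The credit math above is simple enough to sketch as a small budget calculator. The tier names and helper functions are hypothetical; the credit costs and the 500-credit allowance are the ones quoted in this section.

```python
MONTHLY_CREDITS = 500
CREDITS_PER_PROMPT = {"standard": 1, "premium": 2}  # premium = Sonnet 3.5


def prompts_available(tier: str, credits: int = MONTHLY_CREDITS) -> int:
    """Prompts you can send if you only ever use one tier."""
    return credits // CREDITS_PER_PROMPT[tier]


def credits_used(prompt_counts: dict[str, int]) -> int:
    """Total credits for a mix of prompts, e.g. {"standard": 10, "premium": 3}."""
    return sum(CREDITS_PER_PROMPT[tier] * n for tier, n in prompt_counts.items())


print(prompts_available("premium"))           # 250
print(credits_used({"premium": 10}))          # 20: ten small questions on Sonnet
print(credits_used({"standard": 10}))         # 10: the same questions on a standard model
```

Because the cost is per prompt rather than per token, the calculator never asks how long a prompt is, which is exactly why batching work into fewer, larger prompts pays off.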

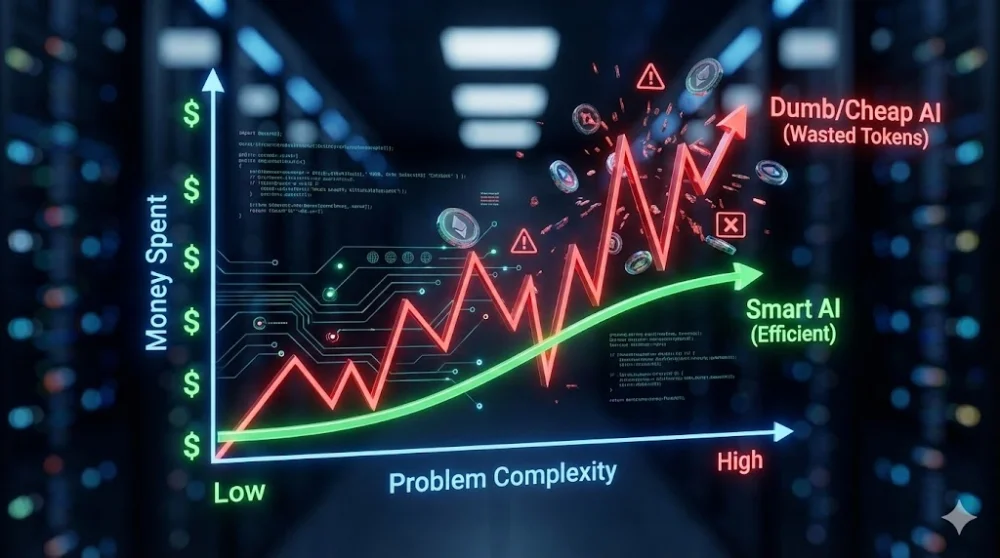

Performance vs. Cost: Are You Paying for Stupidity?

There is a concept called False Economy in AI coding. You might choose a cheaper agent or a cheaper model (like a basic GPT-4o mini) to save money. But if that model fails to solve the bug three times in a row, you have just wasted more tokens (and time) than if you had used the expensive model once.

- Claude Code Pro allows you to solve about 2.0 complex problems per month on their budget plan.

- Cursor Pro (with Sonnet) allows roughly 1.6 problems.

- Codex, even without the absolute top-tier model, allows for 7.5 to 12.2 problems per month simply because the volume allowance is so high.

Even if Codex takes two tries to solve what Claude does in one, you have so much more token runway that it still ends up being cheaper per solution.
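The two-tries argument can be checked with the figures above. This sketch assumes the quoted problems-per-month numbers count single-attempt solutions and that the entry plans are comparably priced; both are our assumptions, and the helper name is hypothetical.

```python
# (low, high) complex problems per month, as quoted in this section.
PROBLEMS_PER_MONTH = {
    "Claude Code Pro": (2.0, 2.0),
    "Cursor Pro (Sonnet)": (1.6, 1.6),
    "Codex": (7.5, 12.2),
}


def effective_problems(raw: float, attempts_per_solution: float) -> float:
    """Problems actually solved if each one takes several attempts."""
    return raw / attempts_per_solution


# Assumption: Codex needs two attempts where Claude needs one.
codex_low, codex_high = (effective_problems(p, 2) for p in PROBLEMS_PER_MONTH["Codex"])
claude = effective_problems(PROBLEMS_PER_MONTH["Claude Code Pro"][0], 1)

print(f"Codex: {codex_low:.2f} to {codex_high:.2f}, Claude Code Pro: {claude:.1f}")
```

Even with its throughput halved, Codex lands at roughly 3.75 to 6.1 problems per month against 2.0, so the conclusion only flips if Codex needs about four or more attempts per problem.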

Final Verdict: Which AI Agent Should You Buy?

Your choice depends on your technical role and budget flexibility.

- For the Absolute Budget Seeker: Download Google Antigravity. It is free (for now), powerful, and offers a glimpse into the future of agentic coding without the monthly subscription anxiety.

- For the Power User / Volume Coder: Stick with Codex. The 13-21 million token limit is unbeatable for the price. It allows you to iterate, refactor, and debug without constantly watching a usage meter.

- For the Strategic Builder: Go with Windsurf, but be careful. If you learn to batch your requests into larger, complex prompts, you extract massive value from those 500 credits. Just don't waste your Sonnet credits on "hello world" questions.

- For the UI/UX Focused Newbie: Cursor is still the king of experience. If you are willing to pay a premium for the smoothest workflow and best autocomplete features, it is worth it. Just keep an eye on those API usage stats.

If you eventually turn pro and budget is less of a concern, the Claude Code 5x plan is the ultimate productivity unlock, but for strict cost-efficiency in 2026, the battle is currently between Codex's volume and Antigravity's free tier.
